In 2005 the UK Treasury sponsored a conference with the title ‘Is There a New Consensus in Macroeconomics?’; a book of the conference was published in 2007. Its answer was ‘yes, but…’, the ‘but’ being – correctly, as it turned out in hindsight – the flagging up of some puzzles and controversies as well as shortcomings such as the absence of international flows and imbalances in the conventional model. At the time this sense of consensus was (by definition) widespread – Olivier Blanchard at the IMF famously wrote about it too, in The State of Macro.


Earlier this week, the ESRC sponsored a symposium on macroeconomics hosted by the Oxford Martin School, with the aim of evaluating the state of macro now. As the ESRC’s Adrian Alsop put it in his introduction: “While in the economics profession, macro is but a sub-set of what we do, any perceived lack of vitality and strength in macro tarnishes economics as a whole in the eyes of citizens and policy makers alike; and that in turn causes reputational damage for the whole of social science. So this is big stuff for the profession, let alone the funding agencies.” Internationally, funding agencies are considering what kind of research in macro is needed – particularly in the UK, where macro was flagged up as a weak area of economics by a 2008 benchmarking study for the ESRC, led by Elhanan Helpman.

My headline from the symposium is that macroeconomists are deeply divided, with any sense of consensus shattered. There is a division between those who regard increasing the sophistication and flexibility of existing models and approaches as an adequate response to the crisis, and those who believe a more far-reaching re-tooling is essential for both scientific and public policy credibility. This is more or less the same as the division between adherents of DSGE models, or more broadly of a deductive equilibrium framework that uses a small number of aggregate variables to make analytical predictions, and those who believe macroeconomics must now become more inductive and data-based. Professor Neil Ferguson, Professor of Mathematical Biology at Imperial College, said in his comment on day 2 of the symposium that he was astounded by how little macroeconomists discussed data and the new techniques available for handling large amounts of data.

When this division between deductive and inductive approaches, between parsimonious analytical models and computer-based statistical techniques (agent-based modelling, econo-physics, statistical exploration of the data) surfaced, the discussion grew a little heated. This included the breaks: many of those who disagree with the prevailing, albeit broken, ex-consensus feel unable to bring about change even in their own research, and are not eager to speak out or change the nature of their work because it will harm their career prospects. To advance in UK economics departments requires publication of numerous articles in a small number of American journals which are firmly sticking to the conventional modelling approach.

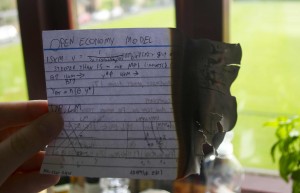

My view is that if macroeconomics does not abandon its obsession with being able to write down analytical 3 equation models with maybe 12 or even 20 variables to explain and predict what is happening at large scale in the economy, it will lose all meaning and purpose. Below is a picture of my son burning his macroeconomics notes as soon as he’d taken his final exam – despite having an outstanding teacher, it seemed obvious to him that macroeconomics was a fairy tale, a fable. Yet many macroeconomists seem not to realise that they are dealing in metaphors, and that IS-LM curves are more like unicorns than Higgs bosons. Microeconomics suffers in the same way but not so badly and applied microeconomists are already a bit more flexible – more willing to use ad hoc rules of thumb derived from behavioural psychology, more willing to use qualitative evidence and business data, not just highly aggregated time series data.

Burning unicorns?

So I was wholly in agreement with Professor David Hendry, who in his presentation on statistical techniques for exploring data, said:

– all macroeconomic theories are incomplete, incorrect and changeable

– all macroeconomic time series are aggregated, inaccurate and rarely match theoretical concepts

– all empirical macroeconometric models are aggregated, inaccurate and mis-specified in numerous ways

So why justify an empirical model by an invalid theory that will soon be altered? Why is internal model credibility considered more important than verisimilitude? “It’s why people think economists are daft,” he said. And, as he pointed out, DSGE models are not even logically internally consistent because they incorrectly regard agents’ expectations today of the future state of the world, conditioned on what they know today, as the same as their equivalent expectations tomorrow, bar for an unpredictable error – but this would only be true in a stationary world. When the state of the world can change in a non-stationary way between today and tomorrow, the kind of ‘model-consistent’ or rational expectations conventionally used are not possible.
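A toy simulation may help make the stationarity point concrete. It is only a sketch under my own assumptions, not Hendry's method and not a DSGE model: a single normally distributed variable, agents who forecast it using the mean they believe holds today, and a one-off shift in that mean. The function name and all the numbers are invented for illustration.

```python
import random
import statistics


def mean_forecast_error(true_mean, believed_mean, n=5000, sd=1.0, seed=0):
    """Average error when agents forecast y using `believed_mean`
    while y is actually drawn from a normal around `true_mean`."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    errors = [rng.gauss(true_mean, sd) - believed_mean for _ in range(n)]
    return statistics.mean(errors)


# Stationary world: the distribution agents know today still holds tomorrow,
# so their errors are unpredictable and average out to roughly zero.
stationary_bias = mean_forecast_error(true_mean=2.0, believed_mean=2.0)

# Location shift: the mean moves from 2.0 to 3.5 after expectations are formed,
# so the errors are systematically off by roughly the size of the shift.
shifted_bias = mean_forecast_error(true_mean=3.5, believed_mean=2.0)

print(f"stationary: {stationary_bias:.3f}, after shift: {shifted_bias:.3f}")
```

In the stationary case the average error sits close to zero; after the shift it sits close to the size of the shift, which is the sense in which today's expectations stop being a fair guide to tomorrow.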

I hope the ESRC and other social science funders will focus their efforts on enabling the reformist macroeconomists to pursue their alternative approaches. None of us knows what approach to macroeconomics will ultimately prove most fruitful, but at present given the institutional structures in academia, none of the alternatives are being pursued. Academic economists who have spent their careers doing everyday macroeconomics will need 2 or 3 years to change direction and learn new techniques and approaches to data. But if they want to keep their jobs, they will not get the space to do that – they will need to publish another tweak on a DSGE model in the American Economic Review.

Following the conference earlier this year that resulted in What’s The Use of Economics, a working group hosted by the Government Economic Service has been considering the institutional barriers to reform of the undergraduate curriculum, and we will report next year. A parallel consideration is needed of barriers to reform in research, and I don’t excuse microeconomists from the need to think deeply about their subject, but it’s more urgent in macroeconomics because of the crisis.

I’m no economist but it’s apparent to me that some of the overriding conditions of the modern age – climate change, resource depletion, age-demographics, externalised costs coming home to roost – are absent from economic theory which manifests itself largely in terms of money and profit.

A paradigm shift in this discipline is *demanded* if homo sapiens is to salvage a future for the species and the planet we rely on for survival.

You’re a bit harsh – there are good economists thinking about subjects like that. Trouble is – and here I agree with you – all that work is in a separate silo from the macro guys who claim to be studying the big picture.

So, how’s skintnick harsh, when you admit that it’s “…the macro guys who [only] *claim* to be studying the big picture”? Even just re: the etymology of ‘economics’, the integral view that includes the externalities as reality is clear. The word is derived from others with meanings including “manager, steward”, from oikos, and carries the sense of “wealth and resources of a country”.

So, although curious about “harsh”, you seem to resonate with the very important idea of the need for behavioral psychology to be integrated into the equations. Bravo! Thanks for the great article!!

Oh, ‘harsh’ just because I took skintnick to mean that no economists look at such subjects when there is actually lots of work in environmental and natural resource economics, demography, economic geography etc.

Here are some resources on agent-based macro:

http://www2.econ.iastate.edu/tesfatsi/amulmark.htm

Acemoglu et al’s paper on the network approach was published in Econometrica, so progress is being made in moving macro away from the failed DSGE structure, although of course the intellectual rent-seekers among US academics will fight it tooth and nail.

Thank you for the link.

Interestingly, at the symposium the DSGE/equilibrium cohort were most interested in agent-based modelling among the alternative approaches because they realised that you can make the agents optimisers if you choose, and because they heard that it is expensive work requiring large research grants…. 🙂 They were less interested (to say the least) in network models and data-driven computational approaches.

“… he was astounded by how little macroeconomists discussed data …”

As a micro guy, this is something that struck me even in my student days. And something I have heard repeatedly for many years from other microeconomists, but never from a macroeconomist.

In other words, it’s obviously true.

Indeed. Even worse, many of them have such a narrow conception of ‘data’ – i.e. officially collected time series statistics.

What data should people trying to understand the macroeconomy be looking at, that they are not already? What alternatives to official time series?

For example, suppose you are trying to understand how changes in government spending affect unemployment during recessions. Looking at microdata on all government projects or whatever, even if it is “big” with thousands upon thousands of observations on the spending behaviour of workers, orders for firms etc., is useless because the whole point is that there may be offsetting changes elsewhere. Is there some data set with fine-grained observations on all firms and individuals that is going unexploited by economists?

Why should macroeconomists be investing time learning techniques for handling “big data” – what’s the “big data” they are supposed to be looking at?

Official time series statistics are not descriptors of natural objects but socially constructed aggregations of underlying data, put together in specific and complicated ways (e.g. seasonality, hedonic pricing, PPP exchange rates). The underlying data are mostly collected from surveys of individual firms, households etc. Computers mean it is possible to use the survey data directly. Some researchers are now using these data, but most macroeconomists do not. In my PhD thesis, I found that even using industry-level production data made it immediately apparent that macro-labour market models using macro-level aggregates did not have any validity at lower levels of aggregation.

Thanks for the response.

So if I am interested in understanding the impact of changes in government spending on unemployment, I should be using the underlying data from which official time series on government spending, unemployment and output are constructed, should I? Are these data really available?

This is a very interesting discussion. In my field (culture) there are tracking projects but it looks like we are at least 5 years away from having enough data to make a real impact with them – and the data inherently needs interpretation, so it’s a different beast. However, I think this question of interpretation is important for economics too.

If I were to sketch out a caricature of the crisis, I would say that one distinguishing feature was that there was an asset price bubble. And that the “undistinguishing” feature of mainstream macro (DSGE perhaps?) is that it was not able to notice this before the bubble popped. (Many macro-economists did notice this, but few of them are in positions of influence.) I can defend this caricature, but a comment isn’t the place for that. It also doesn’t speak to why this was occurring, but again that’s maybe a bit long for here. And while not knowing what to do might have been the next problem had they spotted it, the first problem was spotting it.

Pre-crisis I was in a heated discussion with a macro economist about the problems with the way inflation was being measured. The irony is that his defence of “core inflation” as a measure was a good one – and I’d argue applies well to current circumstances (where e.g. VAT rises are causing misleading headline figures). Still, good as it was, I feel the discussion highlighted the point – there was no measure being taken by a lot of modellers (many using DSGE) that could point them to the problem of an asset price bubble.

And for me, this is much more key than “big data” or micro-economic approaches. The key challenge in modelling is to keep asking if something in the system is not being modelled. This is not just about data, or simulation, it’s also about having a sense of what is too important to leave out. And the culture of mainstream macro, encouraged by certain trends in AER and other journals, has been to deny that bubbles even exist. (Market price is the market price, after all.) And I think that’s where the problems are rooted: many people were encouraged to be blind to a certain kind of issue, for philosophical/methodological reasons.

Yes, I agree with you. I think David Hendry would argue that his approach would flag up missing variable problems immediately.

You seem to be implying that macroeconomics is in severe disarray because of the existence of the crisis. Is the problem that the crisis wasn’t predicted by standard macro, or that it can’t be rationalised using standard macro?

If the former, no model is a crystal ball and poor forecasting performance is by no means limited to DSGE modelling. So don’t get your hopes up for networks or agent based modelling.

If the latter, I’d agree with Paul Krugman that standard IS/LM analysis (i.e. the stuff your son is burning) has done remarkably well in explaining the economy during a liquidity trap, making predictions that have stood up to the test without resorting to microfounded models with all the bells and whistles. But you seem to think it’s all rubbish, without actually explaining why (other than pointing out that we’re in a crisis). Perhaps you’ve covered this in another post.

On the one hand, you give the impression that DSGE modelling completely dominates academic macro, but on the other hand you say that “macroeconomists are deeply divided”. Well, which is it?

Your point on data. It’s ironic that you suggest that macroeconomists should look to various alternative data sources in the context of DSGE modelling, where standard calibration techniques which actually do use various sources of data are criticised precisely because of that. What other data did you have in mind?

I don’t think your characterisation of the publication process is accurate either. Do you actually think that a paper will be rejected simply because it’s not DSGE, even if it were demonstrated to outperform DSGE? You saw the previous comment about the Acemoglu paper in Econometrica. It’s probably not the only case. Never mind that the stuff by Kydland and Prescott that got the modern DSGE movement going in the first place was a massive break from tradition and is still considered controversial! They were awarded the Nobel for their work – not quite the rigid establishment that the post conveys.

So what is it, precisely, that you think makes DSGE models so useless? And how are those weaknesses going to be improved upon by other research programmes? That’s the interesting question. Although I’m not quite sure what you mean by an “analytical 3 equation model with 12[?] variables to explain”, you speak of maintaining tractability as if it were a weakness. DSGE models are very empirically oriented (unlike what the post suggests (unicorns? really?) and unlike a lot of other work in economics) and you can get a lot of mileage from them in terms of explaining the data with reasonably tractable models.

Besides, even medium scale DSGE models cannot be solved analytically, and it quickly becomes difficult to back out the intuition. In fact, (relative) analytical tractability would be one major advantage that DSGE modelling would maintain over more computationally intense methods. Taken to the extreme, eventually you could have a model detailed enough to simulate the world. But then it ceases to be a model in the sense that it tells you nothing about how the world works.

And about your point on rational expectations – even within your own definitions, how is it “logically internally inconsistent” if stationarity is assumed? You can argue with the assumption, but you can’t say the model is internally inconsistent because the world is actually non-stationary. Or are you saying that DSGE models which assume non-stationarity should not also assume rational expectations?

I don’t mean to sound like I think we shouldn’t challenge prevailing thought, or that agent based modelling cannot outperform DSGE models. I think you’re absolutely right in that regard. I don’t like DSGE modelling in its current state either. But I do think you misrepresent the picture, especially compared to micro.

Remind me again how much predictive power there is in Mas-Colell, the standard one thousand page micro textbook that doesn’t contain a single fact? I can’t wait to see the bonfire your son is going to make out of it =P

Ah yes, well having had the misfortune to have been taught a course by Mas-Colell, I am with you 100% on that – I didn’t understand any of it! My relative preference for micro is for the pragmatic applied stuff, and the jobbing (micro-)economists I know are all cheerful about using ad hoc rules of thumb or behavioural quirks. We care less than (some) macro-economists about micro-foundations, to generalise again.

Reading your comment, it seems I over-simplified for dramatic emphasis. The point about the crisis is that it highlights the inadequacies of macroeconomics so thoroughly, not that it contributed to them. The division is real but the conventional mainstream has a grip on institutional processes, so it is hard for younger economists to pursue intellectual alternatives – a surprising number of academic economists, even eminent ones, have complained to me personally about how powerless they feel, about the intellectual tyranny in their departments, about the way good economists are only getting offers from other departments such as human geography etc.

I barely managed to emerge alive from a single seminar… I can’t imagine what it must have taken to survive an entire course!

That’s interesting… Thanks for your reply. Perhaps a little dramatic emphasis is needed.

Keep us posted!

I often read that the crisis was “impossible” in DSGE models.

This is an odd claim. Most DSGE models just assume exogenous shock processes, and anything is “possible” in that framework. You can interpret those shock processes however you like. You can either explicitly model a financial sector that gets hit with shocks, or in a simpler model you could interpret a productivity shock as a shock to the finance sector.

I think what this criticism is getting at is that DSGE models have not tried to capture what you might think of as endogenous shocks, something like the Minsky story, things gradually getting bent out of shape and then suddenly snapping.

Strikes me that people are confusing what DSGE models can do, and what they happened to be used for. As a general rule, any time you claim “economists did not do X” you will be able to find some economists who did in fact do X, but certainly mainstream, high status DSGE economists did not produce models with endogenous credit bubbles and crises.

Now plenty of people are trying to do that. So I’d withhold judgement on what’s possible in a DSGE framework. DSGE can incorporate departures from rational expectations.

As to the tyranny of methodology, well I don’t have the experience to comment, and I have been told that computable agent based papers have a hard time getting accepted anywhere high status, but then again, I have seen examples of ACE and other frameworks, such as network stability stuff like this
